Difference between revisions of "Description"

| (8 intermediate revisions by 2 users not shown) | |||

| Line 3: | Line 3: | ||

Medusa is a device that allows connecting of old computers to modern display devices (monitors, projectors etc.). | Medusa is a device that allows connecting of old computers to modern display devices (monitors, projectors etc.). | ||

== | == Hardware Revisions == | ||

When first home computers appeared people didn't have special computer monitors in their homes, but almost everybody | * REV A (first hardware version) | ||

[[File:Medusa_REVA_top.jpg|640px|frameless|REV A]] | |||

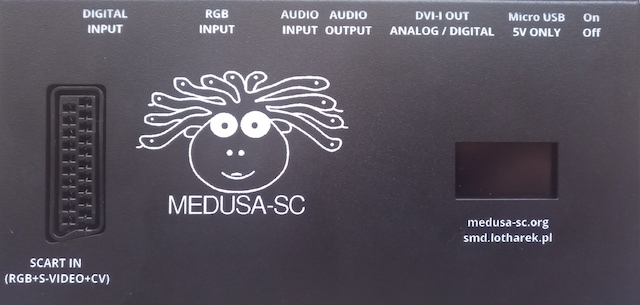

* REV B (second hardware version) | |||

** new db9 socket - 8bit digital input. Ready for supporting C128, CGA, EGA, Apple IIc and others | |||

** moved audio source switching from CPU to FPGA - gives ability to mix both channels using PWM | |||

** added new PLL to generate audio sample frequency (switching between 32k, 44.1k, 48k, 96k, 192k etc.) | |||

** better op-amp that drives audio output jack socket (32ohm headphones can be connected directly, also better audio quality) | |||

** lowered digital audio amplitude (cleaner, not overdriven audio over DVI) | |||

** 5V voltage on DVI passed through Schottky diode (prevents powering Medusa from DVI) | |||

** better input protection on SCART socket (better protection of fragile NCS2564) | |||

[[File:Medusa_REVB_top.jpg|640px|frameless|REV B]] | |||

== A Little History == | |||

When first home computers appeared, people didn't have special computer monitors in their homes, but almost everybody had a standard TV set. This is why first home computers (such as ZX Spectrum, Atari, Commodore) were designed to be connected to standard TV sets. In these times there were different color encoding standards - mainly NTSC, PAL and SECAM. Main differences between those standards were screen refresh rate (60fps for NTSC, 50fps for PAL and SECAM) and way of color signal encoding, but one thing was common: horizontal refresh rate (about 15kHz in all standards). These two values - horizontal refresh rate and screen or vertical refresh rate - define number of lines per screen and therefore maximum vertical resolution. In 50fps systems there were about 312 lines per screen and in 60fps systems even less (about 262 lines). In times of 8-bit computers resolution such as 320x200 was considered "hi-res", so it was absolutely enough. In 16-bit computers it was still ok but users started to want more. Atari, for its Atari ST model, introduced a special hi-res monitor (with 70fps and about 31.5kHz horizontal refresh rate, with resolution 640x400), in PC's they introduced EGA standard (21.8kHz and resolutions up to 640x350), and couple years later VGA with 31.5kHz horizontal refresh rate. At the beginning every video standard (computer or graphic card) needed a dedicated monitor. The problem was that users didn't want to buy a new monitor every time they changed their graphics adapter. In 1989 in America VESA organisation was incorporated to introduce video standards. The only problem was that when they started defining their standards, there existed already Super VGA with resolution of 800x600 and nobody wanted to use old 15kHz resolutions anymore. Therefore the lowest available "standard" resolution defined by VESA was 640x350 with horizontal refresh rate 31.5kHz, so this was the lowest horizontal refresh rate accepted by almost all monitors. 
The other problem is also vertical (or screen) refresh rate. Minimal refresh rate defined by VESA is 60Hz (60fps). This is why most modern monitors don't accept lower horizontal refresh rate than 31.5kHz. With vertical refresh rate it is a bit better, because introduction of HDMI compatibility, with its TV standards, forced 50Hz, so the majority of monitors that have a HDMI port should accept 50Hz. | |||

== Problems with old computers == | == Problems with old computers == | ||

As | As you can see when one wants to connect an old computer to a modern display, one can encounter problems like: | ||

* color decoding in PAL/NTSC signal (in case of Composite Video or S-Video signal) | * color decoding in PAL/NTSC signal (in case of Composite Video or S-Video signal), | ||

* | * finding exact pixel clock frequency, | ||

* double horizontal refresh rate (when we double 15.5kHz we are close enough to be accepted by modern | * double horizontal refresh rate (when we double 15.5kHz we are close enough to be accepted by modern displays), | ||

* some modern displays don't have analog input - only digital | * some modern displays don't have analog input - only digital. | ||

== Solution == | == Solution == | ||

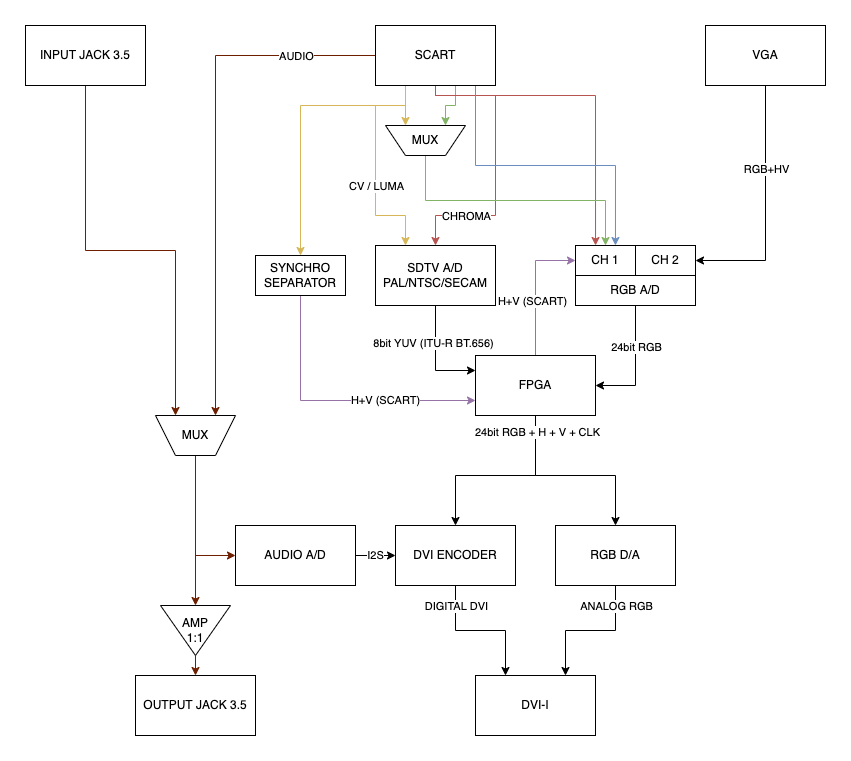

* The first problem to solve is | * The first problem to solve is decoding PAL or NTSC signal (some old computers don't have RGB output). To achieve that Medusa uses specialised video decoder, an integrated circuit created by Analog Devices, that can sample and decode SDTV signal (PAL, NTSC or even SECAM). | ||

* The second problem is to find exact pixel clock for input signal (for RGB signals). There are no ultimate solution for this. In Medusa you can always manually define number of pixels per line, but to achieve that more automatically we measure specific signal "fingerprint" (based on synchro signals) and pick one from | * The second problem is to find the exact pixel clock for the input signal (for RGB signals). There are no ultimate solution for this. In Medusa you can always manually define number of pixels per line, but to achieve that more automatically we measure a specific signal "fingerprint" (based on synchro signals) and pick one from dozens of available pre-set options. | ||

* The third problem is to double the horizontal refresh rate. To achieve that every line from input is put into small memory block inside FPGA chip and then emitted twice. This is why sometimes we call such devices "scan doublers". This is of course done only when necessary (input horizontal refresh rate is lower than 31kHz). | * The third problem is to double the horizontal refresh rate. To achieve that every line from input is put into a small memory block inside an FPGA chip and then emitted twice. This is why sometimes we call such devices "scan doublers". This is of course done only when necessary (input horizontal refresh rate is lower than 31kHz). | ||

* The last problem is to output signal in digital form. In Medusa we decided to use DVI-I standard. DVI standard is theoretically obsoleted by HDMI, but since HDMI is licensed and DVI not, we decided to use DVI. Also DVI in full version (which is in Medusa) also outputs all signals in analog format. This is very convenient because it allows | * The last problem is to output a signal in digital form. In Medusa we decided to use DVI-I standard. DVI standard is theoretically obsoleted by HDMI, but since HDMI is licensed and DVI not, we decided to use DVI. Also DVI in full version (which is implemented in Medusa) also outputs all signals in analog format. This is very convenient because it allows the use of older 17" and 19" LCD panels, that are very cheap and have better screen proportions for old computers than new TV's. At the same time, using a simple cable, one can also connect it to an HDMI device. | ||

== Why Medusa ? == | == Why Medusa? == | ||

This is actually very good question. There are a lot of devices that do similar things. The only problem is ... I've tried almost all of them (FrameMeister included). They all lacked something. | This is actually a very good question. There are a lot of devices that do similar things. The only problem is ... I've tried almost all of them (FrameMeister included). They all lacked something. | ||

* Cheap Chinese devices | * Cheap Chinese devices use chips designed for TV's, with a lot of features that are good in case of movies but unacceptable in case of computers (few frames of latency). Also picture quality is horrible - RGB signal is sampled with constant ratio - not in sync with original pixels. They can't accept anything other than PAL or NTSC-like signals (forget about ST-mono or Amiga-AGA funny resolutions). | ||

* FrameMeister - very good device, but expensive. It also | * FrameMeister - a very good device, but expensive. It also accepts only PAL and NTSC-like resolution (no ST-mono etc.). | ||

* OSSC - also very good device, but without composite video and s-video inputs. Only good for RGB signals. | * OSSC - also a very good device, but without composite video and s-video inputs. Only good for RGB signals. Tough to configure. | ||

So what can do | So what can Medusa do? | ||

* Accept CVBS signal (Composite Video) on SCART (or JP21 - special JP21 version) | * Accept CVBS signal (Composite Video) on SCART (or JP21 - special JP21 version) | ||

* Accept S-Video signal on SCART (or JP21) | * Accept S-Video signal on SCART (or JP21) | ||

* Accept RGB signal on SCART or VGA input (with more than 50 predefined settings | * Accept RGB signal on SCART or VGA input (with more than 50 predefined settings for popular old computers; it can work not only with ST-mono, but also Viking card emulated by MIST - yes 1280x1024 !!!; more modes will be added with future firmware updates) | ||

* Encode audio can be switched on and (using DVI-HDMI adapter and HDMI capable display) audio signal from SCART (or 3.5mm jack) is digitised and mixed with video | * Encode audio on DVI; this option can be switched on and (using DVI-HDMI adapter and HDMI capable display) audio signal from SCART (or 3.5mm jack) is digitised and mixed with video | ||

* Allows user to change a lot of settings on the fly using OSD or | * Allows user to change a lot of settings on the fly using OSD or built-in OLED display (settings such as contrast, brightness, X/Y picture shift etc.). | ||

* Output signal analog and digital at the same time on DVI-I, so it can be connected both to old VGA monitors (also CRT ones) | * Output signal analog and digital at the same time on DVI-I, so it can be connected both to old VGA monitors (also CRT ones) as well as modern HDMI monitors or TV's. | ||

* Easy firmware update (no special device is needed - just connect Medusa via USB to computer and run updater | * Easy firmware update (no special device is needed - just connect Medusa via USB to a computer and run binary updater - available for Win,Linux,MacOS) | ||

Why Medusa is not perfect? :) | |||

Because a perfect solution does not exist. Medusa doesn't change vertical refresh rate, so if your monitor can't accept 50Hz then it will not display video from Medusa. Most monitors with HDMI inputs are able to display 50Hz (because of TV standards), but that's not not always the case with old VGA LCD panels. I have good experiences with LG's and NEC's, but can't guarantee that a particular monitor will accept 50Hz. Probably at some point we will prepare a list of monitors that can do that. With such approach to screen conversion (simple line doubling) any resolution change on input will also lead to resolution change on output. Even small change will usually cause a black screen on the displaying device (for about 0.5 - 1 second). This is normal - this is how displaying devices work. The only way to circumvent that would be to constantly display a picture in a chosen resolution (for example 1920x1080@60) and sample pixels from input and put them in a displayed framebuffer. But in this approach a lot of problems would occur. For example all 50Hz scrolls would be jagged. This is why we decided not to do that. Also the device would be much more expensive. | |||

== Internals of Medusa == | |||

[[File:Medusa_internals.png|851px|frameless|block diagram of Medusa]] | |||

Latest revision as of 11:48, 22 November 2023

What is Medusa?

Medusa is a device that allows old computers to be connected to modern display devices (monitors, projectors, etc.).

Hardware Revisions

- REV A (first hardware version)

- REV B (second hardware version)

  - new DB9 socket: 8-bit digital input, ready for supporting the C128, CGA, EGA, Apple IIc and others

  - audio source switching moved from the CPU to the FPGA, which makes it possible to mix both channels using PWM

  - new PLL generating the audio sample rate (switchable between 32 kHz, 44.1 kHz, 48 kHz, 96 kHz, 192 kHz, etc.)

  - better op-amp driving the audio output jack (32-ohm headphones can be connected directly; audio quality is also better)

  - lower digital audio amplitude (cleaner, non-overdriven audio over DVI)

  - the 5 V line on DVI passes through a Schottky diode (prevents Medusa from being powered via DVI)

  - better input protection on the SCART socket (better protection of the fragile NCS2564)

A Little History

When the first home computers appeared, people didn't have dedicated computer monitors at home, but almost everybody had a standard TV set. This is why the first home computers (such as the ZX Spectrum, Atari and Commodore machines) were designed to be connected to ordinary TV sets. At that time there were several color encoding standards, mainly NTSC, PAL and SECAM. The main differences between them were the screen refresh rate (60 fps for NTSC, 50 fps for PAL and SECAM) and the way color was encoded, but they had one thing in common: the horizontal refresh rate (about 15 kHz in all standards). These two values, the horizontal refresh rate and the screen (vertical) refresh rate, define the number of lines per screen and therefore the maximum vertical resolution. In 50 fps systems there were about 312 lines per screen, and in 60 fps systems even fewer (about 262 lines). In the era of 8-bit computers, a resolution such as 320x200 was considered "hi-res", so this was absolutely enough.

For 16-bit computers it was still OK, but users started to want more. For its Atari ST, Atari introduced a special hi-res monochrome monitor (about 71 Hz vertical and about 35.7 kHz horizontal refresh rate, with a resolution of 640x400); on PCs, IBM introduced the EGA standard (21.8 kHz, resolutions up to 640x350) and, a couple of years later, VGA with a 31.5 kHz horizontal refresh rate. At first, every video standard (computer or graphics card) needed a dedicated monitor, and users didn't want to buy a new monitor every time they changed their graphics adapter. In 1989 the VESA organisation was incorporated in the USA to define video standards. By the time VESA started defining them, however, Super VGA with a resolution of 800x600 already existed and nobody wanted to use the old 15 kHz modes anymore. Therefore the lowest "standard" resolution defined by VESA was 640x350 with a 31.5 kHz horizontal refresh rate, and this became the lowest horizontal refresh rate accepted by almost all monitors. This is why most modern monitors don't accept horizontal refresh rates lower than 31.5 kHz.

The other problem is the vertical (screen) refresh rate. The minimum refresh rate defined by VESA is 60 Hz (60 fps). Here the situation is a bit better: HDMI compatibility, with its TV standards, forced support for 50 Hz, so the majority of monitors that have an HDMI port should accept 50 Hz.
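The line counts quoted above follow from a simple ratio: the number of lines per screen is the horizontal refresh rate divided by the vertical refresh rate. Worked through for the nominal PAL, NTSC and VGA line rates:

```latex
N_\text{lines} = \frac{f_H}{f_V}
\qquad
\text{PAL: } \frac{15\,625\ \text{Hz}}{50\ \text{Hz}} = 312.5
\qquad
\text{NTSC: } \frac{15\,734\ \text{Hz}}{59.94\ \text{Hz}} \approx 262.5
\qquad
\text{VGA: } \frac{31\,469\ \text{Hz}}{59.94\ \text{Hz}} \approx 525
```

Keeping f_V fixed and doubling f_H doubles the number of lines per screen, which is why a 15 kHz signal has to be line-doubled to reach the roughly 31 kHz range that modern displays expect.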

Problems with old computers

As you can see, anyone who wants to connect an old computer to a modern display can run into the following problems:

- decoding the color from a PAL/NTSC signal (for Composite Video or S-Video),

- finding the exact pixel clock frequency,

- doubling the horizontal refresh rate (doubling the roughly 15.5 kHz of TV-like signals gives roughly 31 kHz, which is close enough to be accepted by modern displays),

- some modern displays have no analog input at all, only digital ones.

Solution

- The first problem to solve is decoding the PAL or NTSC signal (some old computers don't have an RGB output). To achieve this, Medusa uses a specialised video decoder, an integrated circuit made by Analog Devices, that can sample and decode SDTV signals (PAL, NTSC or even SECAM).

- The second problem is finding the exact pixel clock for the input signal (for RGB signals). There is no universal solution for this. In Medusa you can always set the number of pixels per line manually, but to do it more automatically we measure a specific signal "fingerprint" (based on the sync signals) and pick one of dozens of available presets.

- The third problem is doubling the horizontal refresh rate. To achieve this, every input line is stored in a small memory block inside the FPGA chip and then emitted twice. This is why such devices are sometimes called "scan doublers". Of course, this is done only when necessary (when the input horizontal refresh rate is lower than 31 kHz).

- The last problem is outputting the signal in digital form. In Medusa we decided to use the DVI-I standard. DVI is, in theory, superseded by HDMI, but since HDMI is licensed and DVI is not, we decided to use DVI. Moreover, the full version of DVI (DVI-I, which is what Medusa implements) also outputs all signals in analog form. This is very convenient because it allows the use of older 17" and 19" LCD panels, which are very cheap and have screen proportions better suited to old computers than new TVs. At the same time, a simple cable lets you connect Medusa to an HDMI device as well.
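The second and third steps above (picking a preset from a measured fingerprint, then line doubling) can be sketched in a few lines of Python. This is a minimal model under stated assumptions, not Medusa's firmware: the "fingerprint" is reduced to the measured line frequency and line count (a real implementation may also use sync widths or polarities), the preset table and all function names are hypothetical, and the line doubling models data flow only (in hardware the stored line is read out at twice the input rate while the next line is being written).

```python
from dataclasses import dataclass
from typing import Iterator, List, Optional


@dataclass(frozen=True)
class Preset:
    name: str             # hypothetical preset label
    line_freq_hz: float   # expected horizontal sync frequency
    lines_per_frame: int  # expected number of lines between vertical syncs
    pixels_per_line: int  # total pixels per line, including blanking


# Illustrative values only; this is NOT Medusa's actual preset table.
PRESETS = [
    Preset("example-pal-lowres", 15625.0, 312, 454),
    Preset("example-ntsc-lowres", 15734.0, 262, 455),
    Preset("example-vga-640x480", 31469.0, 525, 800),
]


def match_preset(line_freq_hz: float, lines_per_frame: int,
                 freq_tolerance: float = 0.01) -> Optional[Preset]:
    """Pick the preset whose fingerprint (line frequency, line count) fits the measurement."""
    candidates = [
        p for p in PRESETS
        if abs(p.lines_per_frame - lines_per_frame) <= 1
        and abs(p.line_freq_hz - line_freq_hz) / p.line_freq_hz <= freq_tolerance
    ]
    # Among matching presets prefer the closest line frequency; None if nothing fits.
    return min(candidates, key=lambda p: abs(p.line_freq_hz - line_freq_hz), default=None)


def pixel_clock_hz(preset: Preset, measured_line_freq_hz: float) -> float:
    """Sampling clock locked to the source: total pixels per line x line frequency."""
    return preset.pixels_per_line * measured_line_freq_hz


Line = List[int]  # one scanline as a list of pixel values


def needs_doubling(line_freq_hz: float, threshold_hz: float = 31_000.0) -> bool:
    """Line doubling is applied only below the 31 kHz threshold."""
    return line_freq_hz < threshold_hz


def scan_double(frame: List[Line], line_freq_hz: float) -> Iterator[Line]:
    """Emit each stored input line twice when the input line rate is too low."""
    for line in frame:
        buffer = list(line)  # stands in for the small line memory inside the FPGA
        yield buffer
        if needs_doubling(line_freq_hz):
            yield buffer     # second readout of the same line, at twice the line rate


preset = match_preset(15620.0, 312)
print(preset.name, pixel_clock_hz(preset, 15625.0))  # example-pal-lowres 7093750.0
```

For example, a measured line rate near 15.6 kHz with 312 lines selects the hypothetical PAL preset; at exactly 15 625 Hz its pixel clock comes out at 454 × 15 625 Hz = 7 093 750 Hz, and because that line rate is below 31 kHz, every line is emitted twice.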

Why Medusa?

This is actually a very good question. There are many devices that do similar things. The only problem is... I've tried almost all of them (FrameMeister included), and they all lacked something.

- Cheap Chinese devices use chips designed for TVs, with many features that are fine for movies but unacceptable for computers (a few frames of latency). The picture quality is also horrible: the RGB signal is sampled at a constant rate that is not in sync with the original pixels. They can't accept anything other than PAL- or NTSC-like signals (forget about ST-mono or the funny Amiga AGA resolutions).

- FrameMeister - a very good device, but expensive. It also accepts only PAL- and NTSC-like resolutions (no ST-mono, etc.).

- OSSC - also a very good device, but without Composite Video and S-Video inputs, so it is only good for RGB signals. It is also tough to configure.
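The complaint about RGB being sampled "at a constant rate" can be made concrete with a small model. The sketch below assumes an idealised converter that takes a fixed number of samples per line at sample centres, unrelated to the source pixel clock; 320 active source pixels and 720 samples per line are illustrative numbers, not measurements of any particular device, and the function name is hypothetical. It counts how many samples land on each source pixel.

```python
from collections import Counter


def samples_per_source_pixel(source_pixels: int, samples_per_line: int) -> Counter:
    """Count how many samples fall on each source pixel when one line of
    `source_pixels` pixels is sampled `samples_per_line` times at a fixed rate."""
    counts: Counter = Counter()
    for j in range(samples_per_line):
        # Centre of sample j, expressed in source-pixel coordinates
        pos = (j + 0.5) * source_pixels / samples_per_line
        counts[int(pos)] += 1
    return counts


unsynced = samples_per_source_pixel(320, 720)  # 2.25 samples per source pixel
locked = samples_per_source_pixel(320, 640)    # exactly 2 samples per source pixel
print(sorted(set(unsynced.values())))  # [2, 3]
print(sorted(set(locked.values())))    # [2]
```

With the unsynchronised ratio, identical source pixels end up 2 or 3 samples wide, which shows up as uneven pixel widths on screen; a sampling clock locked to an integer multiple of the source pixel clock gives every pixel the same width.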

So what can Medusa do?

- Accepts a CVBS (Composite Video) signal on SCART (or on JP21 in the special JP21 version)

- Accepts an S-Video signal on SCART (or JP21)

- Accepts an RGB signal on the SCART or VGA input, with more than 50 predefined settings for popular old computers. It works not only with ST-mono but also with the Viking card emulated by MIST (yes, 1280x1024!). More modes will be added in future firmware updates.

- Encodes audio on DVI: when this option is switched on (and a DVI-HDMI adapter and an HDMI-capable display are used), the audio signal from SCART (or the 3.5 mm jack) is digitised and mixed into the video signal

- Lets the user change many settings on the fly (such as contrast, brightness and X/Y picture shift) using the OSD or the built-in OLED display

- Outputs analog and digital signals at the same time on DVI-I, so it can be connected both to old VGA monitors (including CRTs) and to modern HDMI monitors or TVs

- Easy firmware updates: no special device is needed, just connect Medusa to a computer via USB and run the updater binary (available for Windows, Linux and macOS)

Why isn't Medusa perfect? :)

Because a perfect solution does not exist. Medusa doesn't change the vertical refresh rate, so if your monitor can't accept 50 Hz, it will not display video from Medusa. Most monitors with HDMI inputs can display 50 Hz (because of TV standards), but that's not always the case with old VGA LCD panels. I have had good experiences with LG and NEC monitors, but I can't guarantee that a particular monitor will accept 50 Hz. At some point we will probably prepare a list of monitors that can do this.

With this approach to screen conversion (simple line doubling), any resolution change on the input also leads to a resolution change on the output. Even a small change will usually cause a black screen on the display for about 0.5 to 1 second. This is normal: this is how displays work. The only way to avoid it would be to constantly display a picture in one chosen resolution (for example 1920x1080@60), sample pixels from the input and put them into the displayed framebuffer. But this approach would cause many other problems; for example, all 50 Hz scrolls would be jagged. This is why we decided not to do it. The device would also be much more expensive.
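Why a fixed 60 Hz framebuffer makes 50 Hz scrolls jagged follows from simple arithmetic: 50 input frames have to be spread over 60 output frames, so some input frames are shown twice. A minimal sketch, assuming an idealised framebuffer that always displays whichever input frame is current, no tearing, and both streams starting together (the function names are illustrative):

```python
from typing import List


def shown_input_frames(input_fps: int, output_fps: int, output_frames: int) -> List[int]:
    """Index of the input frame on screen during each output frame, assuming the
    framebuffer shows whichever input frame is current at that moment."""
    return [(i * input_fps) // output_fps for i in range(output_frames)]


def scroll_steps(frames: List[int]) -> List[int]:
    """Per-output-frame movement of a scroll that advances one step per input frame."""
    return [b - a for a, b in zip(frames, frames[1:])]


frames = shown_input_frames(50, 60, 12)
print(frames)                # [0, 0, 1, 2, 3, 4, 5, 5, 6, 7, 8, 9]
print(scroll_steps(frames))  # [0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1]
```

Every sixth output frame repeats the previous picture, so a scroll that moves evenly at 50 Hz stalls ten times per second when shown at 60 Hz. Passing the input rate through unchanged, as line doubling does, avoids this entirely.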